Weak vs. Strong AI: Unraveling the Myths

Artificial intelligence has become an integral part of our daily lives, from virtual assistants like Siri and Alexa to recommendation systems on Netflix and Amazon. However, a significant misconception persists: that AI can do more than humans can, including possessing human-like understanding and reasoning abilities. To clear this up, it is essential to distinguish between “weak AI” and “strong AI,” two paradigms within AI research.

Weak AI vs. Strong AI

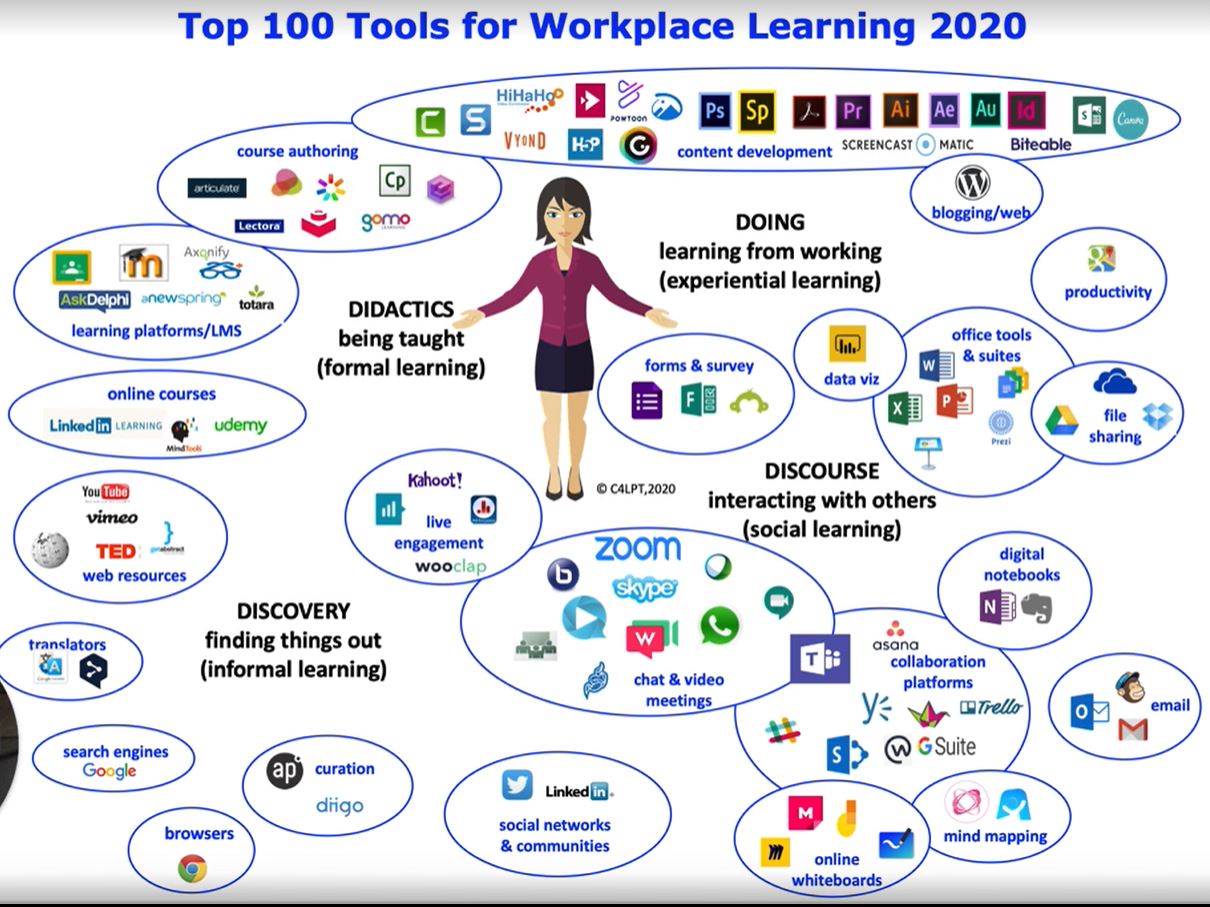

Weak AI, also known as narrow AI, is designed to perform specific tasks such as image classification, game playing, or speech recognition. These systems excel at their designated functions but cannot understand or infer the underlying causes behind the data they process.

They operate on pre-defined rules and large datasets, and what they exhibit is behavior rather than true cognitive processing. For example, deep learning, a technique widely used in weak AI, relies on artificial neural networks to identify patterns and make predictions, but it does not possess true understanding or consciousness.
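To make the idea of pattern matching without understanding concrete, here is a minimal sketch of a narrow AI system: a toy spam filter built on word counts (a naive Bayes classifier with add-one smoothing). The training messages, the labels, and the function names `train` and `classify` are illustrative assumptions, not taken from any system discussed in this article.

```python
import math
from collections import Counter

def train(examples):
    """Count how often each word appears under each label."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts

def classify(text, word_counts, label_counts):
    """Return the label whose word statistics best match the text."""
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    total_messages = sum(label_counts.values())
    scores = {}
    for label in word_counts:
        n_words = sum(word_counts[label].values())
        # Start from how common the label is overall (log prior).
        score = math.log(label_counts[label] / total_messages)
        for word in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing the score.
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical toy data, invented purely for illustration.
TOY_EXAMPLES = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the team on monday", "ham"),
]

model = train(TOY_EXAMPLES)
print(classify("claim your free prize", *model))        # spam
print(classify("agenda for the team meeting", *model))  # ham
```

The filter sorts both messages correctly, yet it only compares word frequencies. It has no notion of what a prize or a meeting is, which is the sense in which such a system performs a task without understanding it.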

In contrast, strong AI, or artificial general intelligence (AGI), aims to replicate human cognitive abilities. This includes reasoning, understanding, and the ability to learn from experience as humans do. Strong AI is not just a tool for problem-solving but is envisioned to have actual mental states, consciousness, and the capacity for imagination.

Despite its theoretical appeal, strong AI remains an elusive goal, as current AI systems lack common sense and the ability to comprehend hidden processes behind the data they analyze.

Misconceptions about AI Capabilities

One major misconception is that AI can surpass human capabilities in all aspects. While AI systems can outperform humans in specific tasks, such as playing chess or Go, they do not possess the holistic understanding and cognitive flexibility inherent to human intelligence. Current AI systems, especially deep learning models, are adept at processing vast amounts of data to make predictions, but they do so without understanding the context or causality behind the data.

Moreover, the myth that AI is “all algorithms” oversimplifies its complexity. AI systems rely not only on algorithms but also on high-quality data, effective user interfaces, and interdisciplinary research. Reducing AI to just a set of algorithms ignores the broader context of its development and application.

The Practical Implications of Weak AI

Despite the limitations of weak AI, its practical applications are profound. Weak AI systems provide scalable, efficient solutions for handling big data and have become ubiquitous in various industries. From spam filters and recommendation systems to voice assistants and navigation tools, weak AI enhances our daily lives by automating and optimizing routine tasks.

The Elusive Goal of Strong AI

Strong AI, with its promise of human-like cognition and understanding, remains a topic of extensive research and debate. Some argue that to achieve strong AI, future research should focus on developing compositional generative predictive models (CGPMs) that mimic the human brain’s ability to infer and understand the causes behind sensory inputs.

However, the realization of strong AI poses ethical and practical challenges, including the risk of manipulation and the need for these systems to align with long-term, homeostasis-oriented purposes. In any case, the development of strong AI is still a distant goal.

Conclusion

Understanding the distinction between weak AI and strong AI helps dispel common myths about AI capabilities. While weak AI systems provide valuable tools for specific tasks, strong AI remains a theoretical concept, far from realization.

Acknowledging these differences is crucial for setting realistic expectations and guiding future AI research and development. As AI technology continues to evolve, it is imperative to focus on ethical considerations and ensure that these advancements serve to enhance human potential rather than overshadow it.

Butz, M. V. (2021). Towards Strong AI. Künstl Intell, 35(1), 91–101. https://doi.org/10.1007/s13218-021-00705-x

Flowers, J. C. (2021). Strong and weak AI: Deweyan considerations. Künstl Intell. Retrieved from Worcester State University. jflowers@worcester.edu

Liu, B. (2021). “Weak AI” is likely to never become “Strong AI”, so what is its greatest value for us? arXiv preprint arXiv:2103.15294. https://doi.org/10.48550/arXiv.2103.15294

Nussbaum, F. G. (2023). A comprehensive review of AI myths and misconceptions. Review: AI Myths and Misconceptions (Version: October 31, 2023). Retrieved from frank@fgnussbaum.com

Raphaela Pouzar

Marketing Team Assistant

Raphaela Pouzar, a Bachelor of Business Administration student at IMC Krems, works at MDI as a marketing assistant alongside her studies. Additionally, she is currently pursuing an AI certificate from Harvard Business School.
